How analog in-memory computing could power the AI models of tomorrow

A new IBM Research paper on analog in-memory computing is featured on the cover of Nature Computational Science. The paper is one of a trio highlighting the future role of unconventional compute paradigms in AI, both in the cloud and on the edge.

In recent years, AI models have become more capable, but also much, much larger. The memory occupied by neural network weights has grown steadily, with some models featuring 500 billion parameters, or even trillions. On traditional computer architectures, inference costs time and energy every time these weights are moved between memory and compute. Analog in-memory computing, which enmeshes memory and compute, eliminates this bottleneck, saving time and energy while still delivering exceptional performance.

In a trio of new papers, IBM Research scientists showcase their work on scalable hardware with 3D analog in-memory architecture for large models, phase-change memory for compact edge-sized models, and algorithm innovations that accelerate transformer attention.

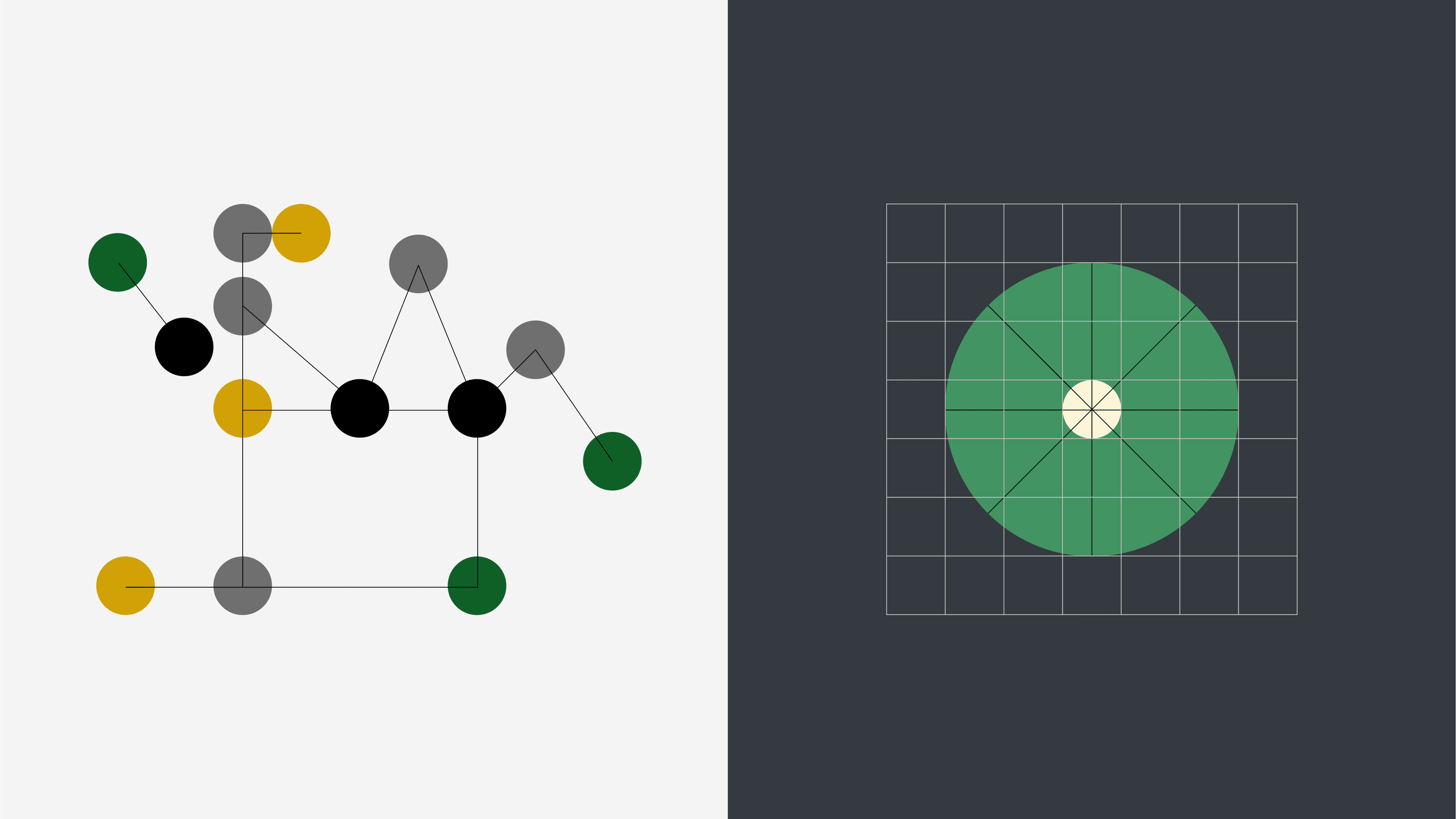

Chips based on analog in-memory computing are uniquely well suited for running cutting-edge mixture of experts (MoE) models, outperforming GPUs on multiple measures, according to a new study from a team at IBM Research. Their work, featured on the cover of the journal Nature Computational Science, shows that each expert in a layer of an MoE network can be mapped onto a physical layer of a 3D non-volatile memory in the brain-inspired architecture of 3D analog in-memory computing chips. Through extensive numerical simulations and benchmarking, the team found that this mapping can achieve exceptional throughput and energy efficiency for running MoE models.

Along with two other new papers from IBM Research, it demonstrates the promise of in-memory computing for powering AI models with transformer architectures, both for edge and enterprise cloud applications. And according to these new papers, it’s nearing time to move this experimental technology out of the lab.

Layers of expertise

"Taking analog in-memory computing into the third dimension ensures that the model parameters of even large transformer architectures can be stored fully on-chip," said IBM Research scientist Julian Büchel, the lead author of the MoE paper which demonstrated that it’s beneficial to stack each of the MoE's 'experts' on top of each other in a 3D analog in-memory computing tile.

In an MoE model, specific layers of a neural network can be split into smaller layers. Each of these smaller layers is called an 'expert,' referring to the fact that it specializes in handling a subset of the data. When an input arrives, a routing layer decides which expert (or experts) to send the data to. When the team ran two standard MoE models through their performance simulation tool, the simulated hardware outperformed state-of-the-art GPUs.
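The routing step described above can be illustrated with a minimal sketch. It is not taken from the paper: the function name `moe_layer`, the choice of top-2 routing, and the use of a single square weight matrix per expert are all assumptions made for illustration.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def moe_layer(x, router, experts, top_k=2):
    """Send x to the top_k highest-scoring experts and mix their outputs.

    Hypothetical simplification: each expert is one weight matrix.
    """
    gate = softmax(matvec(router, x))          # one score per expert
    chosen = sorted(range(len(experts)), key=lambda i: gate[i], reverse=True)[:top_k]
    norm = sum(gate[i] for i in chosen)        # renormalise over the chosen experts
    out = [0.0] * len(experts[0])
    for i in chosen:                           # only the chosen experts do any work
        for j, v in enumerate(matvec(experts[i], x)):
            out[j] += (gate[i] / norm) * v
    return out, chosen

rng = random.Random(0)
dim, n_experts = 4, 8
router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
experts = [[[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
           for _ in range(n_experts)]
x = [rng.gauss(0, 1) for _ in range(dim)]

y, chosen = moe_layer(x, router, experts, top_k=2)
print("experts used:", chosen)
print("output:", y)
```

Only two of the eight expert matrices are multiplied for this input, which is where the reduced compute per inference comes from.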

"That way, you can scale neural networks much better and deploy a large and very capable neural network with the computational footprint of a much smaller one," said IBM Research scientist Abu Sebastian, who leads the team behind the new papers. "As you can imagine, it also minimizes the amount of compute required for inference." Granite 1B and 3B use this model architecture to reduce latency.

In the new study, they used simulated hardware to map the layers of an MoE network onto analog in-memory computing tiles, each comprising multiple vertically stacked tiers. These tiers, containing the model weights, could be accessed individually. In the paper, the team depicts the layers as a high-rise office building with various floors, each containing different experts who can be called upon as needed.
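As a rough mental model of the office-building picture, the sketch below places one expert on each tier of a single tile and reads only the tier the router asks for. The `Tile3D` class, its fields, and the toy weights are hypothetical and are not the team's simulator.

```python
from dataclasses import dataclass, field

@dataclass
class Tile3D:
    """Hypothetical 3D tile: one weight matrix per vertically stacked tier."""
    tiers: list                                  # tiers[t] holds the weights on tier t
    reads: list = field(default_factory=list)    # log of which tiers were accessed

    def mvm(self, tier, x):
        """Multiply x by the weights on one tier; the other tiers stay idle."""
        self.reads.append(tier)
        return [sum(w * v for w, v in zip(row, x)) for row in self.tiers[tier]]

# Expert e of the MoE layer is placed on tier e of the same tile.
# Toy weights: expert e simply scales its input by (e + 1).
expert_weights = [[[float(e + 1), 0.0], [0.0, float(e + 1)]] for e in range(4)]
tile = Tile3D(tiers=expert_weights)

routed = {"token_a": 2, "token_b": 0}   # router decisions, assumed given
x = [1.0, 2.0]
outputs = {token: tile.mvm(tier, x) for token, tier in routed.items()}

print(outputs)     # {'token_a': [3.0, 6.0], 'token_b': [1.0, 2.0]}
print(tile.reads)  # [2, 0]
```

All four experts live in the same tile, but each token touches only the floor it was routed to.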

Stacking the expert layers onto distinct tiers is intuitive, but the results of this strategy are what count. In their simulations, the 3D analog in-memory computing architecture achieved higher throughput, higher area efficiency, and higher energy efficiency when running an MoE model compared to commercially available GPUs running the same model. The advantage was largest when it came to energy efficiency, as the GPUs sacrifice a lot of time and energy when moving model weights between memory and compute — a problem that doesn’t exist in analog in-memory computing architectures.

According to IBM Research scientist Hsinyu (Sidney) Tsai, who contributed to the work, this is a crucial step towards maturing 3D analog in-memory computing so that it can eventually accelerate enterprise AI computing in cloud environments.

Transformers at the edge

The second paper the team worked on was an accelerator architecture study, which was presented in December in an invited talk at the IEEE International Electron Devices Meeting. They demonstrated the feasibility of performing AI inference in edge applications on ultra-low-power devices, using phase-change memory (PCM; see Note 1) for analog in-memory computing to bring transformer models to the edge efficiently. In the team’s analysis, their neural processing units showed competitive throughput for transformer models, with expected energy benefits.
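To make the idea of storing weights as device conductances concrete, here is a minimal numerical sketch of a matrix-vector multiplication on a crossbar. It assumes a common textbook scheme that the article does not spell out: each signed weight is encoded as the difference of two conductances, inputs are applied as voltages, and output currents are summed along a line. The constant `G_MAX`, the Gaussian noise model, and the function names are illustrative assumptions, not measured values or the team's design.

```python
import random

G_MAX = 25e-6  # assumed maximum device conductance in siemens (illustrative only)

def program_crossbar(weights, w_max):
    """Map each signed weight to a (G_plus, G_minus) conductance pair."""
    return [[(max(w, 0.0) / w_max * G_MAX, max(-w, 0.0) / w_max * G_MAX)
             for w in row]
            for row in weights]

def analog_mvm(pairs, x, w_max, noise_std=0.0, rng=None):
    """Apply inputs as voltages, sum currents per output line, rescale to weight units."""
    rng = rng or random.Random(0)
    out = []
    for row in pairs:
        current = 0.0
        for (g_plus, g_minus), v in zip(row, x):
            # Assumed noise model: Gaussian error as a fraction of G_MAX per device pair.
            g = (g_plus - g_minus) + rng.gauss(0.0, noise_std) * G_MAX
            current += g * v   # Ohm's law per device, Kirchhoff's law for the sum
        out.append(current * w_max / G_MAX)
    return out

weights = [[0.5, -1.0], [2.0, 0.25]]
x = [1.0, 2.0]
w_max = 2.0

pairs = program_crossbar(weights, w_max)
print("ideal:", analog_mvm(pairs, x, w_max))
print("noisy:", analog_mvm(pairs, x, w_max, noise_std=0.01))
```

With no noise the result matches the digital product, which is about [-1.5, 2.5] here. The weights never leave the array, and the limited precision of the devices shows up as small errors in the output.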

"In edge devices, you have energy constraints, cost constraints, area constraints, and flexibility constraints," said IBM Research scientist Irem Boybat, who worked on the paper. "So we proposed this architecture, to address these requirements of edge AI." She and her colleagues outlined a neural processing unit with a mix of PCM-based analog accelerator and digital accelerator nodes, which work in concert to handle different levels of precision.

Because of this flexible architecture, a variety of neural networks could run on these devices, Boybat said. For the purposes of this paper, she and her colleagues explored a transformer model called MobileBERT that’s customized for mobile devices. The team's proposed neural processing unit performed better than an existing low-cost accelerator on the market, according to their own throughput benchmark, and it approached the performance of some high-end smartphones, as measured by a MobileBERT inference benchmark.

This work represents a step towards a future when analog in-memory compute devices could be mass produced cheaply, Sebastian said, storing all model weights on-chip for AI models. Such devices could form the basis of microcontrollers to help AI inference for edge applications, like cameras and automotive sensors for autonomous vehicles.

Transformers in analog

Last but not least, the researchers outlined the first deployment of a transformer architecture on an analog in-memory computing chip, covering every matrix-vector multiplication operation involving static model weights. Compared with a scenario where all operations are carried out in floating point, its accuracy stayed within 2% on a benchmark called the Long Range Arena, which tests accuracy on long sequences. The results appeared in the journal Nature Machine Intelligence.

Bigger picture, these experiments showed that analog in-memory computing can accelerate the attention mechanism, a major bottleneck for transformers, said IBM Research scientist Manuel Le Gallo-Bourdeau. "The attention computation in transformers has to be done, and that’s not something that can be straightforwardly accelerated in analog," he added. The obstacle lies with the values that need to be calculated in the attention mechanism. They change dynamically, which would require constant re-programming of the analog devices, an impractical prospect in terms of energy and endurance.

To overcome that barrier, they used a mathematical technique called kernel approximation to perform nonlinear functions with their experimental analog chip. This development is important, Sebastian said, because it was previously believed that this circuit architecture could only handle linear functions. This chip uses a brain-inspired design that stores model weights in phase-change memory devices, arrayed in crossbars like the system simulated in the MoE experiments.

"Attention compute is a nonlinear function, a very unpleasant mathematical operation for any AI accelerators, but particularly for analog in-memory computing accelerators," said Sebastian. "But this proves we can do it by using this trick, and we can also improve the overall system efficiency."

The trick, kernel approximation, works around the need for a nonlinear function by projecting inputs into a higher-dimensional space using randomly sampled vectors, then computing the dot product in the resulting higher dimensional space. Kernel approximation is a generic technique that can apply to a range of scenarios, not just on systems using analog in-memory computing, but it happens that it works very well for that purpose.
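A small numerical sketch can show the flavor of this technique. It uses one well-known variant, positive random features for the exponential kernel exp(q · k) that underlies softmax attention. This specific feature map, the function name `random_features`, and the sizes used are assumptions chosen for illustration and are not necessarily what the team ran on their chip.

```python
import math
import random

def dot(a, b):
    return sum(u * v for u, v in zip(a, b))

def random_features(x, W):
    """Project x with the random matrix W, then apply an elementwise exponential.

    Dot products of these features approximate exp(q . k) in expectation.
    """
    half_sq_norm = 0.5 * dot(x, x)
    scale = 1.0 / math.sqrt(len(W))
    return [scale * math.exp(dot(row, x) - half_sq_norm) for row in W]

rng = random.Random(0)
dim, n_features = 4, 4000
# Randomly sampled projection vectors, drawn once and then kept fixed.
W = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_features)]

q = [0.3, -0.2, 0.1, 0.4]
k = [0.1, 0.5, -0.3, 0.2]

exact = math.exp(dot(q, k))                                   # nonlinear kernel
approx = dot(random_features(q, W), random_features(k, W))    # plain dot product
print("exact:", exact, "approx:", approx)
```

The projection matrix `W` is sampled once and never changes, so in principle it is the kind of static weight matrix that can be programmed into an analog array. The dynamically changing queries and keys then pass through it as inputs rather than being written into the devices.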

"These papers present critical breakthroughs for a future where modern AI workloads could run on both the cloud and the edge," commented IBM Fellow, Vijay Narayanan.

Notes

- Note 1: Phase-change memory (PCM) devices store model weights via the conductivity of a piece of chalcogenide glass. When more voltage passes through the glass, it is rearranged from a crystalline to an amorphous solid. This makes it less conductive, changing the value of matrix-vector multiplication operations.
